How Do Vision Transformers See Depth in Single Images?

Investigating the visual cues that vision transformers use for monocular depth estimation. This is an extension of an analysis originally done for convolutional neural networks.

Introduction

Monocular depth estimation is a Computer Vision task in which a depth map is estimated from a single input image. This differs from approaches like SLAM or Structure-from-Motion, where multiple camera images are used to estimate the geometry of the scene. It is often assumed that monocular depth estimation algorithms, similar to human vision, make use of so-called pictorial cues, like the apparent size of a known object or its position relative to the horizon line.

Van Dijk and de Croon published a paper that investigated the visual cues that neural networks exploit when trained for monocular depth estimation.

Van Dijk and de Croon’s paper is unusual in its in-depth analysis of intentionally constructed images to infer the inductive biases of neural networks. This type of analysis lends itself well to image-based approaches, since manipulating annotated images to construct synthetic examples with specific properties is fairly easy.

Recent advances in Natural Language Processing make use of the transformer architecture.

In this blog post we extend Van Dijk and de Croon’s original experiments to include DPT, a transformer-based architecture for monocular depth estimation. We will see whether this type of black-box analysis, which tests specific visual cues and the network’s responses to them, can help us understand the inductive biases of vision transformers.

Methodology

Pictorial Cues

Van Dijk and de Croon’s original publication first reviews the literature on pictorial cues that humans use when estimating distance. These pictorial cues then serve as a starting point for specific tests with the KITTI dataset.

Van Dijk and de Croon first focus on two pictorial cues: the apparent size of objects and their position in the image. The apparent size cue relies on the observation that objects further away appear smaller. It requires some understanding of what type of obstacle appears in the image and knowledge of its typical size.

The later experiments on changing the camera pose are motivated by the intuition that the neural networks implicitly learn a certain camera pose during training. In the KITTI dataset, this would be a camera pose that points parallel to the ground surface at a fixed height above the ground, where the camera is mounted on the vehicle. How the neural networks perform after pitching and rolling the camera has interesting implications for the robustness of using the network with a different camera setup.

The final experiments focus on visual cues related to the appearance of the obstacle in the image. Here, the performance of the neural networks was tested on arbitrary obstacles that do not appear in the KITTI training set. The presence of a shadow under the object also appears to have a strong effect on detection performance.

Vision Transformers

Vision transformers use the transformer architecture that has shown success in Natural Language Processing and other sequence modeling tasks.

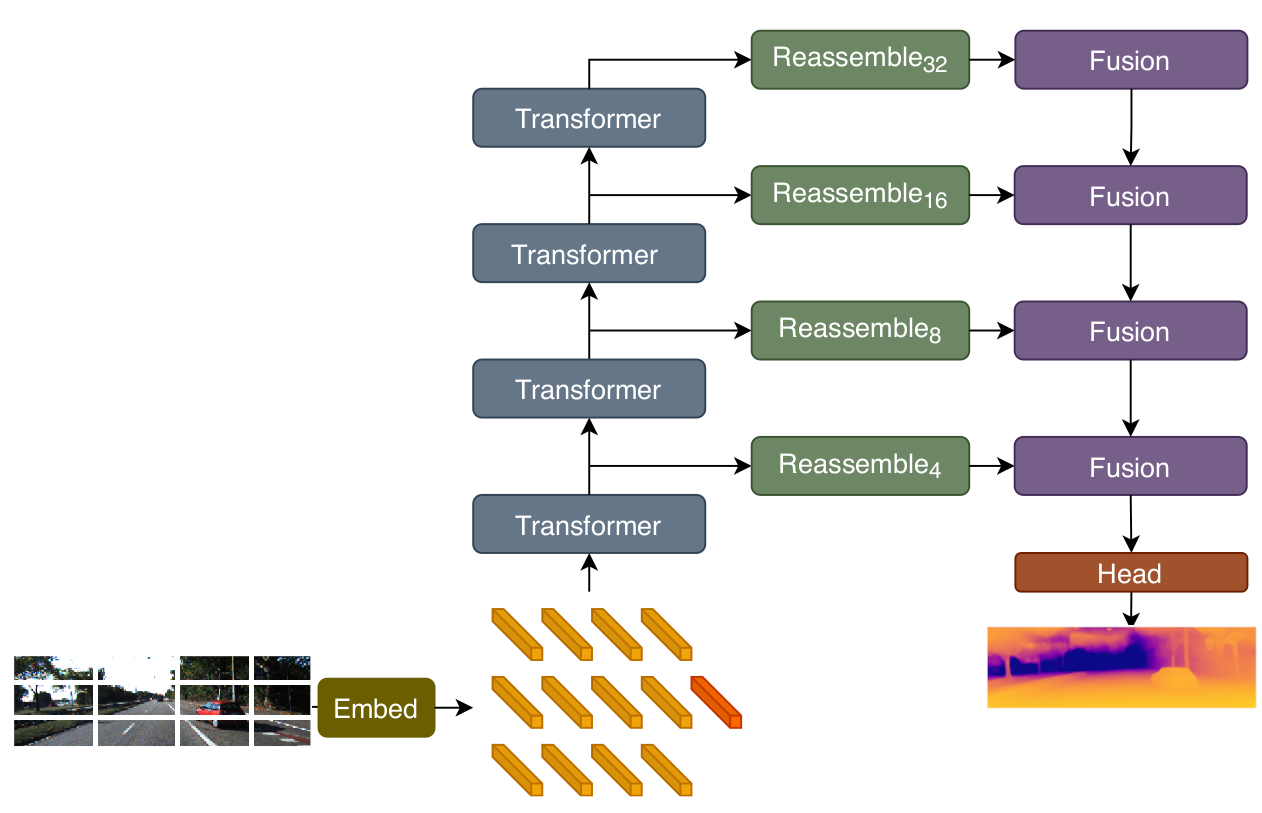

The original transformer expects a sequence of tokens as input. The vision transformer converts non-overlapping patches of the input image into flattened representations that serve as tokens. A positional embedding is added to each token to tell the network where that token sits in the sequence (see Figure 1). DPT also adds a patch-independent readout token, which is intended to capture a global image representation. Specifically, we evaluate DPT-Hybrid, which uses a ResNet-50 feature extractor to create the embeddings.
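To make the tokenization step concrete, here is a minimal NumPy sketch of how a plain ViT turns an image into a token sequence. It is an illustration under assumptions, not DPT’s code: the function names are hypothetical, random matrices stand in for the learned projection, readout token and positional embeddings, and DPT-Hybrid itself feeds ResNet-50 features rather than raw pixel patches into the transformer. The 384 × 384 input and 16-pixel patches are example sizes.

```python
import numpy as np


def image_to_patches(image: np.ndarray, patch_size: int) -> np.ndarray:
    """Split an (H, W, C) image into flattened, non-overlapping patches.

    Returns an array of shape (num_patches, patch_size * patch_size * C),
    ordered row by row over the patch grid.
    """
    h, w, c = image.shape
    p = patch_size
    if h % p or w % p:
        raise ValueError("image height and width must be divisible by patch_size")
    grid = image.reshape(h // p, p, w // p, p, c)  # (rows, p, cols, p, C)
    grid = grid.transpose(0, 2, 1, 3, 4)           # (rows, cols, p, p, C)
    return grid.reshape(-1, p * p * c)             # one flat vector per patch


def embed_tokens(image: np.ndarray, patch_size: int, embed_dim: int,
                 rng: np.random.Generator) -> np.ndarray:
    """Hypothetical ViT-style embedding with random stand-in parameters."""
    patches = image_to_patches(image, patch_size)
    # Linear projection of each flattened patch (learned in a real model).
    w_proj = rng.standard_normal((patches.shape[1], embed_dim)) * 0.02
    tokens = patches @ w_proj
    # Readout token prepended to the sequence, meant to carry global information.
    readout = rng.standard_normal((1, embed_dim)) * 0.02
    tokens = np.concatenate([readout, tokens], axis=0)
    # One positional embedding per sequence position (learned in a real model).
    pos_embed = rng.standard_normal(tokens.shape) * 0.02
    return tokens + pos_embed


rng = np.random.default_rng(0)
image = rng.random((384, 384, 3))
tokens = embed_tokens(image, patch_size=16, embed_dim=768, rng=rng)
print(tokens.shape)  # (577, 768): 24 x 24 patches plus one readout token
```

Once a patch is flattened, the projection `w_proj` has no built-in notion of which vector entries were neighboring pixels; any such spatial structure has to be learned from data.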

Treating the image as a sequence of patches, and each patch as a linear projection of its flattened pixels, could have disadvantages for image data. Flattening an image patch into a one-dimensional token discards the explicit two-dimensional arrangement of neighboring pixels within the patch, and the positional embedding only relates the patches to each other at a coarser level. This leads to the conclusion that vision transformers lack an inductive bias for modeling local visual structures.

Experiments

In the following experiments we present the observations made by Van Dijk and de Croon in their original work alongside our observations for vision transformers. The blog format, with its interactive elements, lends itself well to revisiting the original results from a new perspective.

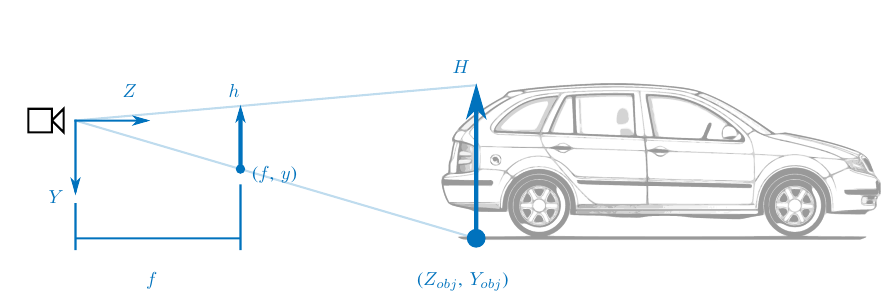

Position vs. Apparent Height

The distance to an object can be estimated geometrically from a single image in two different ways (see Figure 2). Both approaches assume a known and constant focal length $f$, which a neural network implicitly learns when trained on a dataset recorded with the same camera setup. The first approach estimates the object’s real-world height $H$ and compares it to its apparent height $h$ on the image plane:

\[Z_{obj} = \frac{f}{h} H\]

This approach requires a good estimate of the real-world height $H$ of the objects in the KITTI dataset. KITTI contains only a limited number of obstacle classes (cars, trucks, pedestrians), and objects within each class share roughly the same height. It is therefore plausible that the neural network has learned to recognize these objects and uses their apparent height as an estimate of distance. This approach relies only on the scale of the object for the distance estimate.
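As a quick numerical illustration of the apparent-height relation, the sketch below evaluates $Z_{obj} = fH/h$. The focal length of about 721 px (roughly that of KITTI’s rectified colour cameras) and the 1.5 m car height are assumed example values, not numbers from the original experiments.

```python
def depth_from_apparent_height(focal_px: float, real_height_m: float,
                               apparent_height_px: float) -> float:
    """Pinhole relation Z = f * H / h (Z in the units of H)."""
    if apparent_height_px <= 0:
        raise ValueError("apparent height must be positive")
    return focal_px * real_height_m / apparent_height_px


FOCAL_PX = 721.0      # assumed, approximately KITTI's rectified focal length
CAR_HEIGHT_M = 1.5    # assumed typical passenger car height

near = depth_from_apparent_height(FOCAL_PX, CAR_HEIGHT_M, 54.0)
far = depth_from_apparent_height(FOCAL_PX, CAR_HEIGHT_M, 27.0)
print(f"{near:.1f} m, {far:.1f} m")  # 20.0 m, 40.1 m
```

Halving the apparent height doubles the estimated distance, and any error in the assumed $H$ propagates proportionally into $Z_{obj}$, which is why this cue only works for object classes with a predictable size.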

The other approach requires the additional assumption of a flat road surface, which holds in many urban environments. Here we look at the vertical position $y$ in the image where the bottom of the object meets the flat ground surface, which we will call the ground contact point from now on. With $y$ measured downwards from the horizon line (for a camera looking parallel to the ground), the ground contact point can be related to the camera’s height $Y$ above the ground surface to estimate the distance:

\[Z_{obj} = \frac{f}{y} Y\]

Most scenes in the KITTI dataset are recorded on a flat road surface, and the camera’s height $Y$ above the ground remains constant across the dataset. Estimating depth from the position of the ground contact point $y$ in the image is therefore also feasible within the KITTI dataset. This approach relies only on the position of the object for the distance estimate.
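The ground-contact relation can be sketched the same way. Here $y$ is taken as the number of pixel rows between the horizon row and the ground contact point, which assumes a flat road and a camera looking parallel to it. The camera height of 1.65 m is approximately the mounting height of the KITTI cameras; the focal length and horizon row are assumed example values.

```python
def depth_from_ground_contact(focal_px: float, camera_height_m: float,
                              contact_row_px: float, horizon_row_px: float) -> float:
    """Flat-ground relation Z = f * Y / y.

    y is the vertical image distance (in pixels) from the horizon row down to
    the ground contact point; image rows grow downwards.
    """
    y = contact_row_px - horizon_row_px
    if y <= 0:
        raise ValueError("the ground contact point must lie below the horizon")
    return focal_px * camera_height_m / y


FOCAL_PX = 721.0         # assumed focal length in pixels
CAMERA_HEIGHT_M = 1.65   # approximate KITTI camera mounting height
HORIZON_ROW = 173.0      # assumed horizon row for a level camera

z = depth_from_ground_contact(FOCAL_PX, CAMERA_HEIGHT_M, 233.0, HORIZON_ROW)
print(f"{z:.2f} m")  # 19.83 m
```

The same contact row gives a different distance for a camera with a different height, and a pitched camera moves the horizon row, so this cue ties a network to the camera setup it was trained on.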

Van Dijk and de Croon created a set of test images to measure the effect of the scale and position of obstacles on the accuracy of the depth estimate. The test images consist of objects (mostly cars) that are cropped from KITTI images and pasted into open spaces of other KITTI images. Objects are only inserted into images where the scene remains plausible after the insertion.

Each inserted object is placed at a relative distance $Z_{new} / Z$, where $Z$ is the distance at which the object originally appeared, so that $Z_{new} / Z = 1$ corresponds to its original placement. The accuracy of the depth estimate is assessed as a function of this relative distance, which is increased in steps of 0.1 up to $Z_{new} / Z = 3$. The relative distance is increased in three different ways (see Figure 3):

- Position and Scale: here we change the ground contact point and the scale of the object when increasing the relative distance

- Position Only: here we only change the ground contact point and keep the original scale of the object

- Scale Only: here we keep the original ground contact point fixed and change the scale of the object
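The three manipulations follow from the two relations for $Z_{obj}$: moving an object to relative distance $r = Z_{new}/Z$ shrinks its apparent height to $h/r$ and moves its ground contact point to $y/r$ rows below the horizon. The sketch below, with hypothetical helpers `relative_distances` and `manipulate`, computes the target apparent height and contact position for each configuration; it does not reproduce how the original authors actually pasted the image crops.

```python
from typing import List, Tuple


def relative_distances(start: float = 1.0, stop: float = 3.0,
                       step: float = 0.1) -> List[float]:
    """Relative distances Z_new / Z from start to stop (inclusive) in fixed steps."""
    n = int(round((stop - start) / step))
    return [round(start + k * step, 10) for k in range(n + 1)]


def manipulate(rel_dist: float, height_px: float, contact_y_px: float,
               mode: str) -> Tuple[float, float]:
    """Return (new_height_px, new_contact_y_px) for one test configuration.

    contact_y_px is measured in pixels below the horizon row.
    """
    scaled_height = height_px / rel_dist      # apparent size shrinks as 1 / r
    moved_contact = contact_y_px / rel_dist   # contact point approaches the horizon
    if mode == "position_and_scale":
        return scaled_height, moved_contact
    if mode == "position_only":
        return height_px, moved_contact
    if mode == "scale_only":
        return scaled_height, contact_y_px
    raise ValueError(f"unknown mode: {mode}")


for mode in ("position_and_scale", "position_only", "scale_only"):
    print(mode, manipulate(2.0, 54.0, 60.0, mode))
# position_and_scale (27.0, 30.0)
# position_only (54.0, 30.0)
# scale_only (27.0, 60.0)
```

Position Only keeps the ground-contact cue consistent with the new distance while the apparent size stays unchanged, and Scale Only does the opposite, so each configuration isolates one of the two cues.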

Comparing the depths that both neural networks predict for these objects in the three configurations should give us an idea of how much each of these visual cues is used for monocular depth estimation (see Figure 4).

Camera Pose

To estimate the depth of obstacles from their vertical image position, the neural networks require some knowledge of the camera’s height above the ground surface and of its pitch. The camera in the KITTI dataset is rigidly fixed to the car. The only deviations in its orientation come from pitch and heave motions of the car’s suspension or from slopes in the terrain, which do not occur often in the urban environment of the KITTI dataset.

Van Dijk and de Croon propose two different ways in which the neural networks could account for the camera pose.

- Fixed Camera Pose: here the neural networks assume the same camera pose in all images, which allows them to infer the depth of an obstacle directly from its vertical image position. This assumption prevents the trained network from being transferred directly to another vehicle with a different camera setup.

- On-the-fly Pose Estimation: here the neural networks use visual cues like vanishing points or horizon lines to estimate the camera pose. A vertical shift of the horizon line in the image, for example, indicates a change in pitch.

We repeat the following experiment, proposed by Van Dijk and de Croon, to determine the effect of a change in pitch. It should indicate how much the neural networks assume a fixed camera pose or instead use visual cues like the horizon to estimate the camera pose on the fly for each input image. For this, we take a smaller crop of the original KITTI image at different heights: the crop window is shifted vertically by $\pm 30$px relative to the image center. This corresponds to an approximate change in camera pitch of $\pm 2$ to $3$ degrees.
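The link between the vertical crop offset and the camera pitch is a small-angle pinhole approximation: shifting the crop by $\Delta y$ pixels roughly corresponds to a pitch change of $\arctan(\Delta y / f)$. The sketch below assumes a focal length of about 721 px and a hypothetical crop of 300 rows taken from a 375-row KITTI-sized image; neither value comes from the original experiment.

```python
import math
from typing import Tuple


def pitch_change_deg(shift_px: float, focal_px: float) -> float:
    """Approximate pitch change in degrees for a vertical crop shift (sign follows the shift)."""
    return math.degrees(math.atan(shift_px / focal_px))


def crop_rows(image_height: int, crop_height: int, shift_px: int) -> Tuple[int, int]:
    """Top row and exclusive bottom row of a centred crop shifted by shift_px.

    Positive shift_px moves the crop window down in the image.
    """
    top = (image_height - crop_height) // 2 + shift_px
    if top < 0 or top + crop_height > image_height:
        raise ValueError("shifted crop falls outside the image")
    return top, top + crop_height


FOCAL_PX = 721.0                       # assumed focal length
IMAGE_HEIGHT, CROP_HEIGHT = 375, 300   # assumed image and crop heights

for shift in (-30, 0, 30):
    print(shift, crop_rows(IMAGE_HEIGHT, CROP_HEIGHT, shift),
          f"{pitch_change_deg(shift, FOCAL_PX):+.2f} deg")
# -30 (7, 307) -2.38 deg
# 0 (37, 337) +0.00 deg
# 30 (67, 367) +2.38 deg
```

Because the crop window moves by `shift_px`, a horizon at image row $r$ appears at row $r - \text{top}$ inside the crop, so the expected horizon shift in the prediction is the negative of the crop shift.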

We then compare how well the horizon shift in the predicted depth map matches the true horizon shift produced by the vertical position of the crop (see Figure 5). The horizon line in the predicted depth map is determined by fitting a line along disparity values of zero (i.e. infinite distance) in the central region of the KITTI images. If the predicted horizon line shifts as expected with the synthetic change in pitch, then the neural network most likely does not just learn an absolute position for the horizon line in the input images.
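One possible way to implement the horizon fit is sketched below in NumPy. It is an assumption about the procedure, not the original code: for each column in a central band, it takes the lowest row whose predicted disparity is still below a small threshold `eps` (treated as zero, i.e. infinitely far), then fits a straight line through these points with `np.polyfit`. The synthetic flat-ground disparity map used to exercise it is also hypothetical.

```python
import numpy as np


def fit_horizon(disparity: np.ndarray, col_start: int, col_stop: int,
                eps: float = 1e-3) -> tuple:
    """Fit row = slope * col + offset to the near-zero-disparity boundary.

    disparity: (H, W) array where larger values are closer; rows grow downwards.
    """
    cols, rows = [], []
    for c in range(col_start, col_stop):
        far_rows = np.flatnonzero(disparity[:, c] < eps)  # pixels at ~infinite distance
        if far_rows.size:
            cols.append(c)
            rows.append(far_rows.max())  # lowest such pixel marks the horizon
    if len(cols) < 2:
        raise ValueError("not enough columns with near-zero disparity")
    slope, offset = np.polyfit(cols, rows, deg=1)
    return float(slope), float(offset)


def synthetic_disparity(height: int, width: int, horizon_row: int) -> np.ndarray:
    """Flat-ground toy map: zero above the horizon, growing linearly below it."""
    below = np.maximum(np.arange(height) - horizon_row, 0) * 0.01
    return np.tile(below[:, None], (1, width))


true_shift = 30
for horizon in (100, 100 + true_shift):
    disp = synthetic_disparity(300, 400, horizon)
    _, offset = fit_horizon(disp, 100, 300)
    print(f"true horizon {horizon}, fitted {offset:.1f}")
# true horizon 100, fitted 100.0
# true horizon 130, fitted 130.0
```

Comparing the fitted offsets for two crops against the known crop shift gives the quantity plotted in the pitch experiment: a network that tracks the horizon reproduces the shift, while one that assumes a fixed pose does not.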

We can observe in the previous figure that DPT accounts for the change in pitch, and its effect on the horizon line in the cropped images, better than the original MonoDepth. DPT was trained on a larger meta-dataset called MIX 6.

Obstacle Recognition

The previous experiments have shown that the vertical position of objects in the image is an important visual cue for depth estimation. The vertical position can be determined by finding the object’s ground contact point. This does not require additional knowledge about the type of object, suggesting that the depth estimate can be done for arbitrary objects.

Van Dijk and de Croon did a qualitative analysis of how well MonoDepth detects obstacles that are uncommon in the KITTI dataset. We compared DPT’s performance on the same task (see Figure 6 below).

Cropping out parts of familiar obstacles can provide some insight into which visual cues most strongly lead the neural networks to detect the object. Here we also look at DPT’s performance on the same input images (see Figure 7 below).

Conclusions

Experiment Results

In this blog post we reproduced the original results for MonoDepth and extended them with experiments on the transformer-based neural network DPT. We generally observed improved performance with the more recent DPT network.

DPT still uses the ground contact point as an important visual cue for estimating the depth of obstacles, but relies on it less than the original MonoDepth did. For DPT, the scale of the known object also contributes to a more accurate depth estimate.

DPT handles changes in camera pitch better than the original MonoDepth. However, the DPT model we evaluated was pre-trained on a larger and more diverse set of depth images than MonoDepth, so the training data, rather than the difference in architecture between the transformer-based DPT and the CNN-based MonoDepth, could be the determining factor here.

DPT is able to detect unusual objects (e.g. a fridge) as obstacles in the scene even when the drop shadow is absent as a visual cue, whereas MonoDepth relies heavily on the dark border on the ground surface to detect obstacles. Thin vertical obstacles (e.g. parts of the car obstacle) are also detected better by DPT than by MonoDepth. Here again, the diversity of DPT’s pre-training data could explain its more robust detection of unusual obstacles.

Both models have difficulty detecting thin horizontal obstacles (e.g. the top part of the car is missed). This is a known issue in stereo vision, caused by the usually horizontal alignment of the camera pairs.

We see a lot of value in analyzing neural networks with this type of experiment. The analysis can easily be extended to more neural networks and could be part of an evaluation dashboard used during architecture development and training. There are, of course, issues with this black-box view of each of these networks.

Comments on Blog Format

We believe that this interactive blog format lends itself well to letting readers interact more closely with the results obtained by different algorithms. The data can be condensed to take up less screen space and be presented as part of the main text instead of being relegated to the supplementary material.

Modern plotting libraries like Plotly support HTML exports, which make creating interactive visualizations for the web fairly easy. We see this approach to web publishing as a middle road between the now common large PDF publications with many embedded images and the time-consuming process of creating visualizations for platforms like the former Distill.